The Kirkpatrick Evaluation Model is a framework for assessing the effectiveness of a training program, be it online, blended, or face-to-face. Keep reading to discover:

- What the Kirkpatrick Model is and how it’s used

- The four levels of the framework

- Pros and cons of implementing the Kirkpatrick evaluation model

What is the Kirkpatrick Model?

You’ve just rolled out a new employee training course. It’s dynamic; you’ve used multimedia, instructional design models, and best practices to create a pretty awesome program. It’s time to celebrate, right?

Wrong.

All the beautiful slides in the world mean nothing to L&D leaders and senior management. Instead, they just want to know one thing: How effective was the training?

Did it solve the performance issue in question? Has it led to a behavior change? If the answer is no, the training fails.

So, how can we, as instructional designers, evaluate the impact of our training initiatives?

For over seven decades, the Kirkpatrick Evaluation Model has been the framework of choice. And while it offers a step-by-step process for determining effectiveness, it’s very easy to misuse this model. To help you get it right, we’ve created this hands-on guide.

Donald Kirkpatrick first developed the Kirkpatrick Evaluation Model in 1954 as part of his Ph.D. dissertation. It has since become one of the most commonly used evaluation methods among instructional designers.

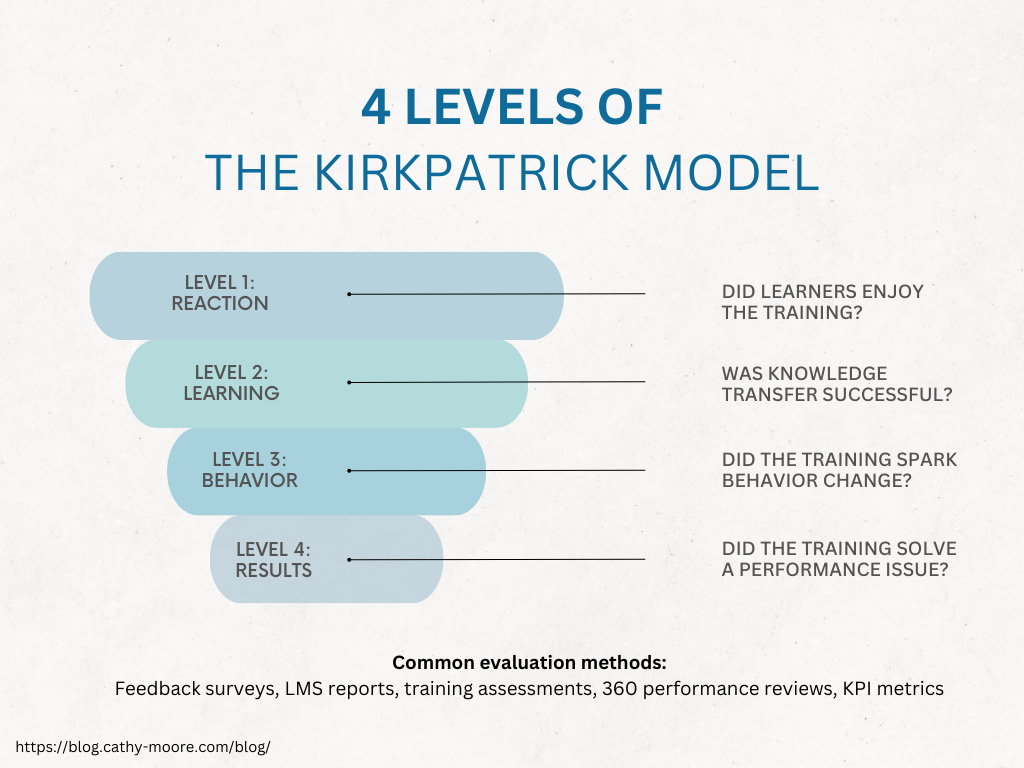

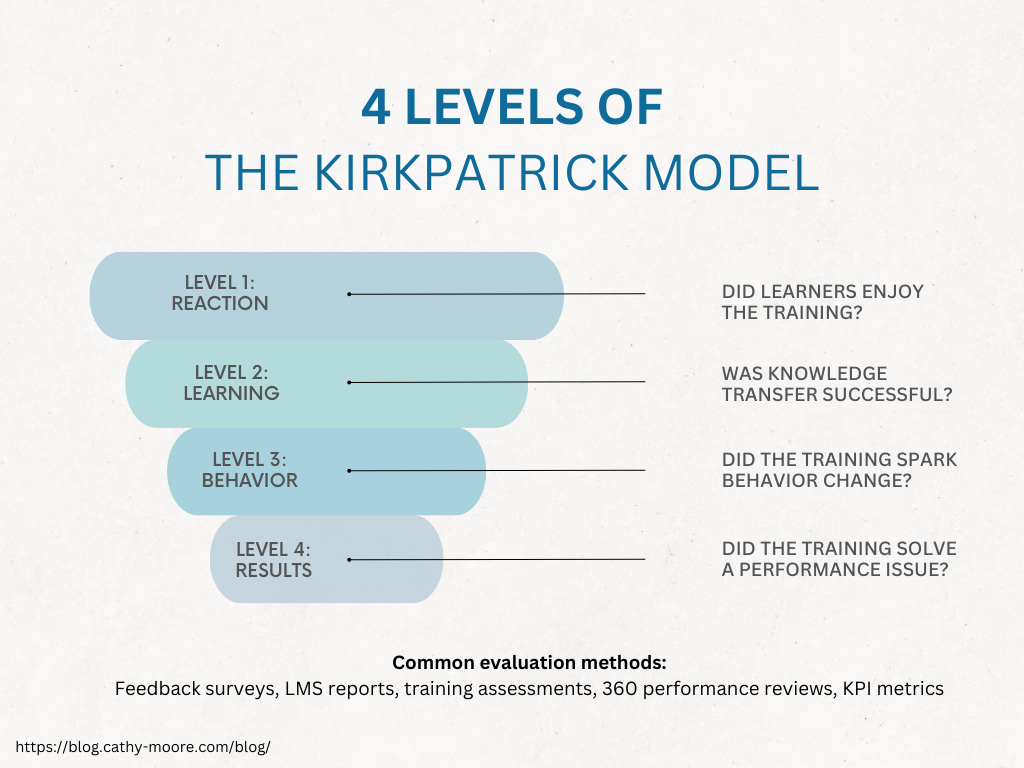

The model consists of four levels or stages: reaction, learning, behavior/impact, and results.

Kirkpatrick believed that training programs should be evaluated on these four levels. And if you followed these steps in order, you could accurately evaluate the effectiveness of an educational program.

However, in recent years, some instructional designers have suggested a different way to use the framework: starting with level 4 (results) and working backward to level 1 (reaction).

This new approach to an old model makes a lot of sense: by defining the results you want first, you can align the training with organizational goals from the outset.

Some instructional design experts, such as Jack Phillips, even argue in favor of adding a fifth level, ROI. This is known as the Phillips ROI Methodology.

Let’s take a closer look at the four stages of Kirkpatrick’s framework.

The four levels of the Kirkpatrick Model

According to Kirkpatrick, all training programs should undergo a thorough, four-part evaluation process. Only then can you be sure that they are effective and up to the task.

Here are the four levels of the Kirkpatrick Evaluation Model.

Level 1: Reaction

The reaction level evaluates how engaging and relevant learners find a training course. In other words, it captures learners’ reactions to the training.

This is relatively easy to measure. Simply ask learners to complete a quick survey after they finish the course.

The survey should aim to find answers to the following questions:

- Does the training meet learners’ expectations?

- What are the biggest strengths and weaknesses in the learners’ eyes?

- How useful do participants find the training?

- How do participants rate the overall quality of the program?

These questions enable you to gauge learners’ overall reaction to the course. This gives you a general idea of its success and effectiveness.

Method: Ask learners to complete a survey when they finish the training. Likert scales work well here, as they let learners express their opinions more precisely. You can place the survey at the end of the course, publish it as a separate survey in your LMS, or send it by email upon course completion.

LMS reports can also give insight into how employees receive online training. For example, low completion rates or pass rates can be a sign of low learner satisfaction.
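As a rough illustration of how Likert responses might be summarized, the Python sketch below computes an average score and the share of favorable answers for each survey question. The question labels, the sample scores, and the choice of 4 as the "favorable" threshold are all assumptions made for this example; they are not part of the Kirkpatrick Model itself.

```python
from statistics import mean

# Hypothetical responses on a 1-5 Likert scale
# (1 = strongly disagree, 5 = strongly agree). All values are made up.
responses = {
    "met_expectations": [4, 5, 3, 4, 5],
    "useful_for_my_job": [5, 4, 4, 2, 5],
    "overall_quality": [3, 3, 4, 4, 5],
}


def summarize_likert(scores, favorable_from=4):
    """Return the mean score and the share of answers at or above the threshold."""
    favorable = sum(1 for score in scores if score >= favorable_from)
    return {
        "mean": round(mean(scores), 2),
        "favorable_share": round(favorable / len(scores), 2),
    }


summary = {question: summarize_likert(scores) for question, scores in responses.items()}

for question, stats in summary.items():
    print(question, stats)
```

A low mean or favorable share on a single question, such as usefulness, can point you toward the part of the course that needs attention.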

Level 2: Learning

The learning stage assesses how successfully learners acquire the new knowledge, skills, or behaviors that the training aims to teach.

What you’re really doing at this level is determining if employees understand the training and can apply the new knowledge.

Common ways to measure this are hands-on assignments, post-training tests, or interview-style evaluations to demonstrate the person has learned a new skill.

Method: Include practical assignments, assessments, post-training follow-up quizzes, and other evaluation exercises that demonstrate learners’ ability to apply new skills.

If you really want to know how much participants learned from the training, you can also have them take a pre-course quiz. That way, you can compare those results with the post-training assessment to see how much learning took place.
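The pre- and post-course comparison described above could be sketched as follows in Python. The learner IDs and scores are hypothetical, and the simple "post minus pre" gain is just one possible way to express how much learning took place.

```python
# Hypothetical quiz scores (percent correct), keyed by learner ID.
pre_scores = {"learner_a": 40, "learner_b": 55, "learner_c": 70}
post_scores = {"learner_a": 75, "learner_b": 80, "learner_c": 85}


def score_gains(pre, post):
    """Return the post-minus-pre gain for each learner who took both quizzes."""
    return {name: post[name] - pre[name] for name in pre if name in post}


gains = score_gains(pre_scores, post_scores)
average_gain = sum(gains.values()) / len(gains)

print(gains)
print(f"Average gain: {average_gain:.1f} percentage points")
```

Only learners who completed both quizzes are compared, so anyone who skipped the pre-course quiz does not distort the average.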

Level 3: Behavior/impact

The third level measures if the training has inspired a behavior change. Are employees applying the newly acquired skills in their jobs? To what extent?

We’re all familiar with the forgetting curve, which shows that learners can start to lose newly acquired information in as little as 24 hours unless they put that knowledge to use right away.

When you evaluate behavior, you gain an understanding of how successfully employees can take what they’ve learned and use it to enhance their job performance.

Method: One effective way to evaluate behavior change among learners is to conduct 360-degree feedback reviews. The results help indicate whether the training has led to a behavior change and, when read alongside performance metrics, what impact that change has had.

Level 4: Results

This level of evaluation aims to identify whether the training has delivered the business results it was designed to achieve.

Let’s say you’re evaluating the effectiveness of your leadership program. Has it improved the outcomes the program targeted, such as staff retention?

Method: Employee surveys and pulse checks can offer insight into the answer to this question. So can related metrics like staff retention rates.

This is the most important stage of training evaluation and the most complex to measure. But it’s here that you really get an idea of the impact your training program has had on the business.

Pros and cons

There’s a good reason why the Kirkpatrick Model has remained relevant after all this time. But there are also some notable limitations that have caused some instructional designers to look for alternatives or modifications.

We break down the strengths and weaknesses of Kirkpatrick’s framework below.

Pros

There’s no doubt that Kirkpatrick’s evaluation framework is flexible and easily adaptable to different learning modalities. It works just as well for online, blended, and in-person training environments.

What’s more, it offers instructional designers a structured process to follow when assessing training impact.

The four levels of the model provide insight into the overall performance of training in your company, helping you home in on what matters: did the training encourage the desired behavior change?

Cons

The Kirkpatrick Model has some limitations. One of the biggest drawbacks is that it’s time-consuming to implement. So instructional designers working in fast-paced corporate environments may simply not have the time or resources to complete the four levels.

Furthermore, many ID experts have called its linear structure into question. While there are workarounds, such as starting at level 4 and working backward, the criticism does show that the model isn’t a perfect solution.

Another area where it falls short is the type of data it gives you. Sure, it’s great that it tells you if your training is working. But if your training isn’t performing effectively, it doesn’t tell you where you need to make changes.

Finally, it can be challenging to accurately calculate training ROI using this framework. Therefore, you may need to add another level to your evaluation process to get the final piece of the puzzle.
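For that extra level, the Phillips ROI Methodology mentioned earlier expresses ROI as net program benefits divided by program costs, multiplied by 100. The Python sketch below applies that formula; the function name and the monetary figures are hypothetical, and it assumes you have already converted the training’s benefits into a monetary value, which is usually the hard part.

```python
def training_roi_percent(monetary_benefits, program_costs):
    """Return ROI as a percentage: (benefits - costs) / costs * 100."""
    if program_costs <= 0:
        raise ValueError("program_costs must be greater than zero")
    net_benefits = monetary_benefits - program_costs
    return net_benefits / program_costs * 100


# Hypothetical figures: the program cost 100,000 and the benefits
# attributed to it were valued at 150,000.
roi = training_roi_percent(monetary_benefits=150_000, program_costs=100_000)
print(f"Training ROI: {roi:.0f}%")
```

A positive figure means the benefits attributed to the training outweighed its costs, while a negative one means the program cost more than it returned.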

Key takeaways

The Kirkpatrick Model is a tried-and-tested evaluation approach that gives instructional designers a framework to assess training effectiveness. However, it’s not without its limitations.

Many seasoned instructional designers agree that while the model is still a valuable tool, it needs to be tweaked. Starting at level four and working backward allows you to establish key metrics right away and use them as your North Star throughout the evaluation process.

By Nicola Wylie

Nicola Wylie is a learning industry expert who loves sharing in-depth insights into the latest trends, challenges, and technologies.