In my day, cheating involved scribbling notes on my arm in smudged ink and secretly hiding them under my sleeve. Then, as we moved online, cheating became centered around hurried Google searches.

But that still took time.

AI is different. AI is instant. AI can write entire essays in less time than it takes to make a brew.

So, what do we do to prevent learners from cheating with this tool?

As instructional designers, we’re swimming in uncharted waters, so let’s explore our options.

What we’re up against

Since ChatGPT and other AI tools exploded onto the scene a little more than two years ago, the learning environment has undergone a seismic shift.

Some of it is good.

A lot of it is bad.

One of the worst ways that AI is used is to generate “work” that is then passed off as the student’s own.

A study released on July 24 by Wiley explored the problem in depth and revealed some key concerns:

- Almost half of students are using AI, mostly to write essays and papers and to generate ideas.

- 33% of students have admitted that AI makes it easier to cheat.

- 37% of instructors feel that it negatively impacts critical thinking, and another 30% feel it limits learning.

- Over 50% of both students and instructors believe that more students will use AI to cheat in the next three years.

Here’s what we can do about it:

1. Establish firm rules around AI use

You and I both know that using AI to cheat has no benefit for the learner, but that does not mean AI can’t be used at all.

For instance, the aforementioned study also revealed that many learners are using AI to understand difficult concepts and to check their grammar and spelling.

In situations like these, AI can be genuinely beneficial, supporting learners without undermining the learning experience.

And we must recognize that AI will only become more prevalent, so we have to work out how to embrace it.

That said, you must establish very clear rules around what is – and what isn’t – acceptable when it comes to the use of AI.

Additionally, students need to understand how you will check for AI misuse and what the consequences will be if they are found using AI the wrong way.

2. Talk about the ethical implications and integrity

Learning experiences are meant to be fair and equal, with everyone competing on a level playing field. AI changes that and tips the balance in the cheater’s favor.

Then there is the self-deception. Cheating gives individuals a false sense of accomplishment, but that only lasts until the skills and knowledge have to be applied in the real world.

At that point, the gaps in their learning are quickly exposed.

Your job is to highlight these points and make learners realize that cheating will stunt their professional growth and, ultimately, undermine their credibility.

3. Learn what AI content looks like

Despite having firm rules and explanations in place, some people will still resort to using AI to complete their work.

This is where you and the training facilitators have to step in and play detective.

At present, it’s fairly easy to tell if something has been written by AI. ChatGPT in particular has some very telltale signs, especially if the user is not a skilled prompter.

Look for:

- Repetitive language: AI has a habit of repeating points unnecessarily.

- Neutral language: I mean, we are dealing with a robot here. AI tends to lack emotional depth, feeling, and personal insight.

- Flowery vocabulary: AI loves to show off and use overly complex words that humans just don’t use in everyday language.

- Endless lists: AI tends to pump out information in list form. Look for overuse of numbered points or bullet points.

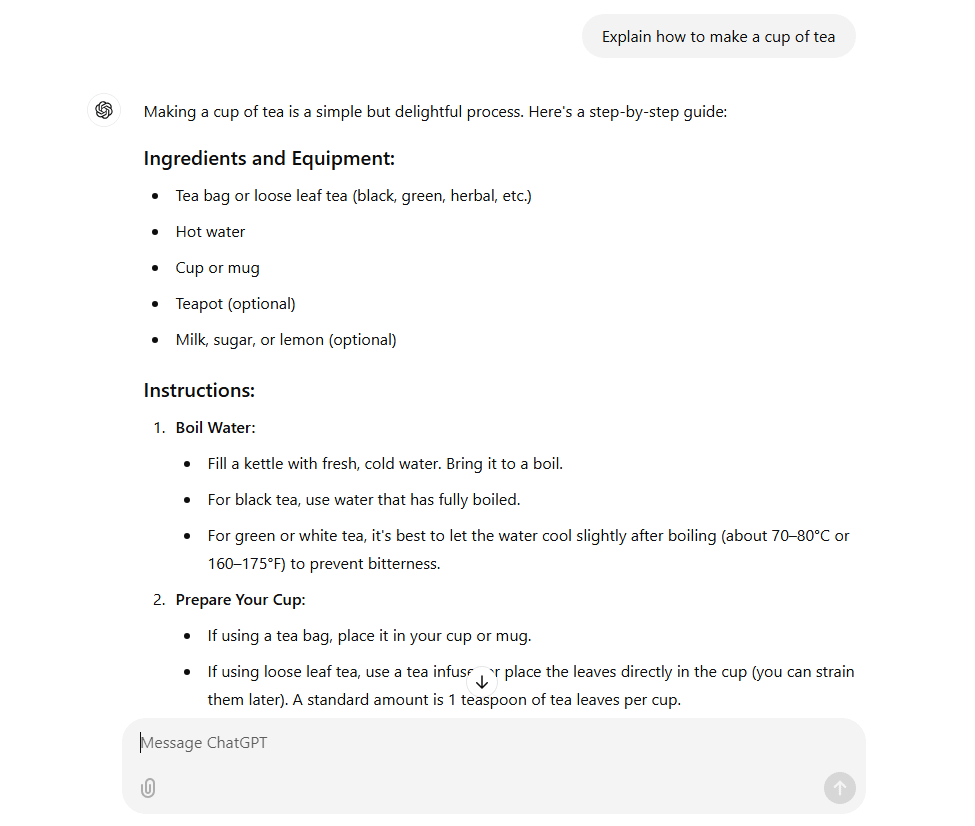

- Extensive explanations: AI tends to over-elaborate when explaining concepts (ask ChatGPT to explain how to make a cup of tea!).

Overall, you’re looking for a generic writing style where nothing jumps out from the page or particularly excites you.

4. Use opinion and self-reflection

As I mentioned, AI isn’t opinionated. For instance, I asked ChatGPT for its opinion of the current US president, and it came back with:

“As an AI developed by OpenAI, I don’t possess personal opinions. However, I can provide an overview of the president’s tenure and recent developments.”

Use this to your advantage and create learning assignments that focus on the learner’s personal opinions, experiences, and beliefs.

For example, questions to ask could be:

“Reflect on how this concept applies to your daily life.”

“What is your stance on this topic, and why?”

Individuals should also be required to provide detailed reasoning to support their views, making it even harder to pass off AI-generated content as their own.

Similarly, students can be asked to reflect on their work or learning processes. Only they know the challenges they faced and what they did to overcome them.

For example:

“What was the most difficult part of this assignment for you, and how did you approach it?”

Ask learners to identify their errors, reflect on why they occurred, and explain what steps they will take to avoid them in the future.

Regardless of AI, these are all valuable learning experiences. But, crucially, a machine does not easily generate them.

5. Reimagine your assessment strategy

Assessments are likely to be one of the first places where learners turn to AI to cheat.

Unfortunately, many assessment formats, such as essays and even coding exercises, are vulnerable to AI-assisted cheating. So, your job is to turn assessment on its head and make it significantly harder to game the system.

Personalize assignments

This feeds back into using opinion and reflection. Assignments in which learners draw on their own experiences and insights are much harder to replicate with AI.

Here are a couple of examples:

Reflect on how the concepts learned in this course apply to your personal career goals.

Analyze this case study, relate it to your local community, and explain how its approach could address your community’s challenges.

Scenarios

Scenario-based assessments are designed to mimic real-world situations and challenges. Often, they use complex branching where each choice affects the outcomes.

They typically involve a high level of critical thinking and analysis alongside personal experience and knowledge. And this makes it pretty difficult for AI to come up with an appropriate response.

Also, don’t forget that AI relies on the knowledge it was trained on, much of which comes from online sources. A unique scenario may not exist anywhere in that material, which means the software cannot fall back on a pre-existing solution.

Live interviews and questioning

Since e-learning became dominant, online assessment has become the go-to for many training initiatives.

Personally, I believe this is going to change, and we’ll see a return to live assessments – in-person or over a video call.

Why?

Because it’s significantly harder to cheat this way and almost impossible to use AI.

For instance, a person can demonstrate their newfound language skills during a live oral assessment. Or, a facilitator can use interview techniques to assess the level of knowledge gained.

Think of how you can add live assessment to your courses in a way that works for your learners.

Design assignments around higher-order cognitive skills

The simplest forms of assessment – quizzes, summaries, etc. – are the easiest ones to cheat.

Consider developing assessments that call for higher-order thinking (the top three levels of Bloom’s Taxonomy: analyze, evaluate, and create). These are the levels that require critical analysis, judgment, and creativity.

Tasks like these require learners to form original ideas or integrate multiple concepts, something current AI tools still struggle to do convincingly.

6. Use technology to fight AI

For every generative AI model that appears, a tool to fight it also emerges. Picking the right one can help you spot AI content.

AI detection software (but beware of false positives)

Using technology to detect AI-written content can be useful, but it must be used with caution.

False positives (flagging original content as AI-generated) are very common, and you run the risk of penalizing a learner who didn’t actually cheat.

Free AI detection tools like ZeroGPT tend to be fairly unreliable. Paid options like OriginalityAI do better but are still known for giving false positives.

Plagiarism tools

AI sometimes lifts content from elsewhere online and reproduces it word for word.

Plagiarism checkers are very effective at catching this. Copyscape is widely regarded as the industry standard, but other tools do just as good a job.

One tip is to pair a plagiarism tool with an AI detection tool and use both sets of results to make a decision.

Browser lockdown apps

These apps restrict what learners can access during online assessments. For instance, they can block certain apps and websites (like ChatGPT) and prevent a user from copy-pasting text.

The obvious downside here is that someone can simply log into an AI tool on a different device and still cheat. Also, anyone technically savvy enough can usually find a way around these restrictions.

Secure online testing software

There are dedicated platforms out there like ExamSoft or ProctorU that strictly monitor and control online test environments. For instance, they come with features like identity verification and screen recording.

The software detects suspicious behavior and flags it for review.

However, some learners may find this intrusive, and it requires users to have a certain standard of IT equipment to be effective.

Final thoughts

Our job isn’t to stamp out the use of AI but rather to learn how we can work effectively alongside it. Although AI is an incredible tool, the bottom line is that it is remarkably generic.

So, if we create learning experiences that minimize the use of AI, doesn’t it stand to reason that our training design will move from bland to extraordinary?

Now that’s definitely food for thought!

By Janette Bonnet

Janette Bonnet is an experienced L&D professional who is passionate about exploring instructional design techniques, trends, and innovations.